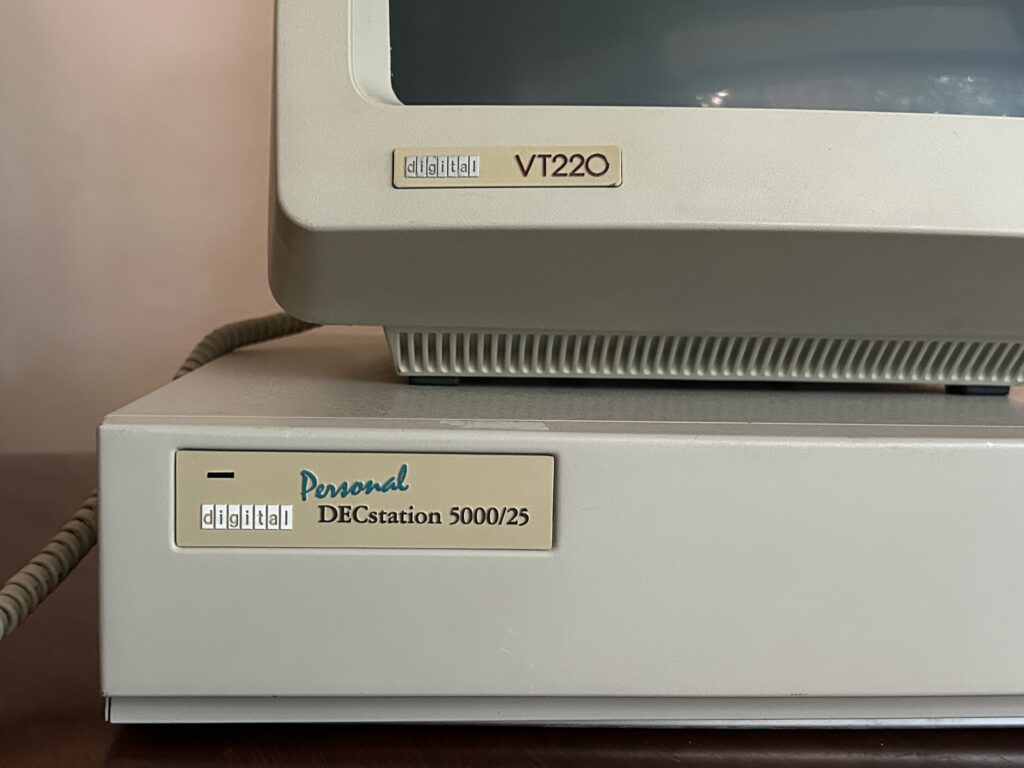

I came across this machine a year or two ago in the “free pile” of our monthly meeting of local Retro Computing enthusiasts. At the meeting, we hooked up a serial line to it , turned it on and found it booted to Ultrix 4.2…It then proceeded to sit on my shelf waiting for me until last week.

What is it? It’s a Personal DECstation 5000/25 from Digital Equipment Corporation (DEC). This means it’s got a 25 MHz R3000 MIPS processor. This baby is maxed out with 40 MB of Ram, with what I assume is it’s original 200 MB SCSI hard drive.

After a successful minor resurrection of a Sun SparcStation 2 recently, I was feeling confident and wanted to compare these contemporary competitors. The “problem” with a 90’s UNIX workstation from a major vendor is that, out of the box, it’s a pretty boring experience. Outside of the (mostly) standard set of UNIX tools, it doesn’t DO anything. For that you need, some software. This is really no different from any computing platform. This big difference is that the software packages for these tended to be either bespoke software written for a particular purpose, or very expensive and licensed commercial packages.

Actually, in this respect the DECstation is in far better shape than the Sun, as DEC was pretty serious about it’s Layered Products offering, which was useful software you could license.

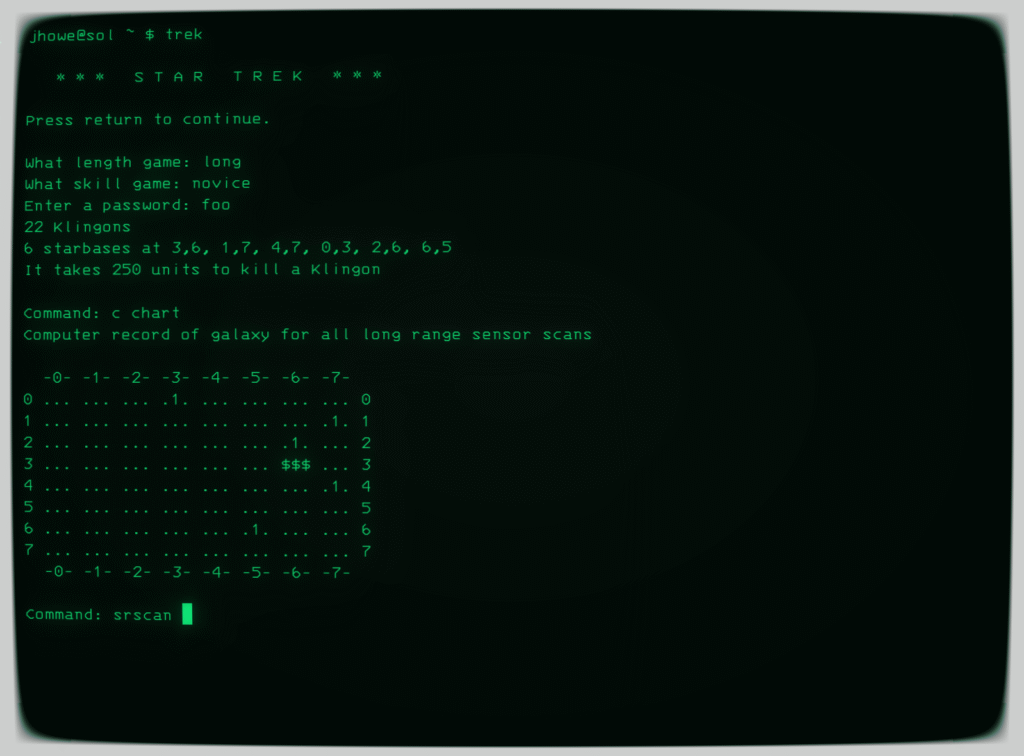

But, I figured I’d like to keep it simple and get some basic terminal based software on the DECstation to scratch the itch and have this 30+ year old machine doing something that I found useful. Email, newsgroups, irc. That seemed like a reasonable and attainable challenge.

So, the first thing you realize is that pretty much any bit of freeware written is expecting a GNU userspace, at the very least this means gcc, also usually things like binutils and m4. At least that was the the baseline I needed to start. I started with gcc 2.2.2, that was old enough where I figured it would build with the stock C compiler — and it did.

I then tried building things like pine, slang, slrn, and ircII. ircII just kind of worked, but the others gave me fits about missing tools, incompatibilities with grep, syntax that gcc 2.2.2, just couldn’t handle, etc. So I build gcc 2.7, that didn’t really help, so went ahead to 2.95. That also didn’t materially change the errors I was running into, though I was able to compile PICO and PINE! With some configuration and an ssh tunnel out to my mail server on my local network, I was able to check and send email! Alright!

Slang and SLRN were posing a bigger issue for me though, I just couldn’t get the older version of the software contemporary with this machine and toolchain to work properly, and I couldn’t get the more modern versions to build at all

I was working through the issues though, on my way to getting the machine configured exactly the way I wanted. Then disaster struck. You may be thinking that the 30 year old 200MB SCSI hard drive crashed…and you would be only partially correct. We had a lightning strike in the neighborhood which tripped a GFI breaker upstream of where the DECstation was plugged in, turning it off immediately in the middle of a compiling something. You could fault me for not having it on a UPS, but as I wasn’t home as the time, it would have mattered little. It is on a surge protector, at least.

The next day, when I fired it up, it wouldn’t boot. The boot loader loads, but then it would hang at some point in the process and never progress. I fought for two days with various version of NetBSD, to try and fsck the root volume to no avail. Oh, it found errors and claimed to fix them, but the boot behavior was the same. So, I said screw it, and decided to Install Ultrix 4.5, the final release, which was also just a little more modern that 4.2A.

The problem, is that the CD-ROM ISO for the 4.5 installer that’s out there, is broken in some fundamental way. It boots okay, however when you enter the installer program, it probes all the drives on the machine so you can select the destination drive….and it crashes when it probes itself, complaining about block size. I burned several CDs, of different copies of the iso I found around the Internet, in 3 different SCSI CD-ROM drives ( I was actually super impressed that I have 3 WORKING SCSI CD-ROM drives in the house), but the behavior was the same. I had a friend test the images and he was getting the exact same behavior even when booting the image from a SCSI emulator — at least that confirmed it was an image problem and not a hardware issue on my end.

He also discovered a workaround, if you set the image type in the emulator to “HardDrive” instead of “CDROM”, it worked. I borrowed his SCSI emulator and after some amount of time decompressing things and laying down bits on disk, I had a fresh ULTRIX 4.5 install!

Another motivation for going for ULTRIX 4.5 is that I found a bunch of pre-compiled binaries of gnu tools for it, along with DEC’s Year 2000 Patch set.

So I started installing some gnu tools, gcc 3, m4, binutils, which all took about 10 minutes. IrcII built and installed without any errors or fiddling, as did PICO, PINE, slang and slrn — holy moly!

I did run into a minor issue when installing the Y2k patch set — I had some environment variable not defined exactly correctly and botched the copy of files. I ended up having to read through the script line by line and manually verify what it did and did not do. Tedious, but not the end of the world.

Coming back to newsgroups and slrn for a moment, I was surprised that the behavior I was seeing in this build was the same. The install script for slang and slrn are a little goofy and end up putting things in the wrong spot, but I was 100% sure I had that all fixed up. But I still just could not get it to search for news groups to subscribe to from the server. The way I’ve setup slrn in the past is to start it, let it create a stub .jnewsrc file, fill out the .slrnrc file with your news server, username, passwd, etc, then load up the program, press ‘L’ to search for groups and subscribe to the ones you want. The current version of the program queries the news server for groups when you search.

It turns out the older version of the program DOES NOT DO IT THIS WAY. Instead, you need to be 100% sure your .slrnrc file is set before you start the program, then start it with the –create command, which creates a local cache of all the newsgroups on the server. When you press ‘L’, you’re searching through the local cache. This paradigm makes a lot of sense when you consider very slow machines and even slower network links. It did however through me for a loop until I stumbled upon a very very old forum post on the Internet which caused the light bulb to go off for me.

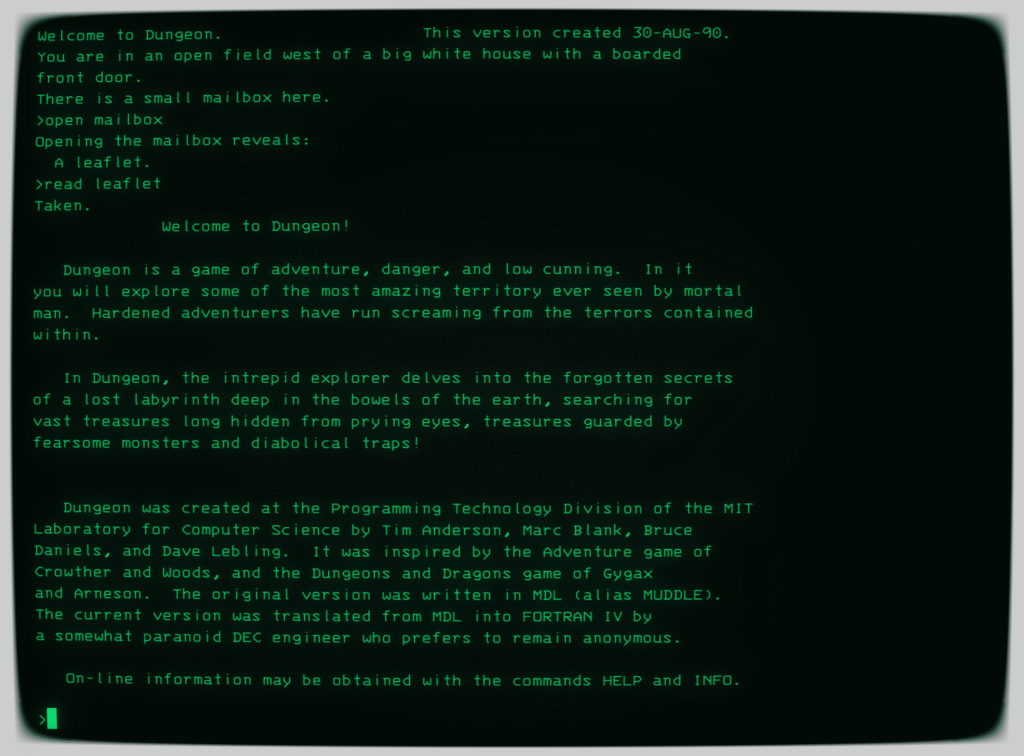

So, now I reading and posting to newsgroups with the machine as well. I even built vim 6.3 for a little bit more of a modern text editor — in fact I’m using it to write this entry. I’m currently trying to get ispell installed so I can spell check my terrible spelling with 25MHz of RISC fury….

— Sent from my Personal DECstation 5000/25